Predicting long-term climate trends is possible with the help of artificial intelligence

NeuralGCM for a mid-latitude wind balance: Performance beyond five years of long-term simulations with a locally-trained atmospheric model

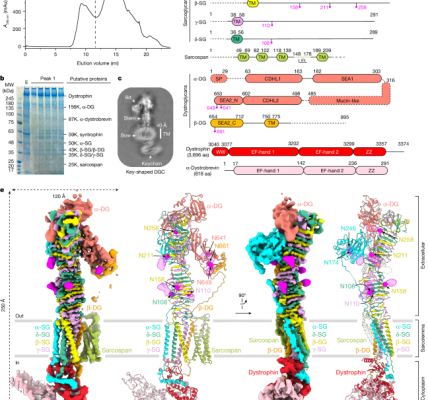

Physically consistent weather models should still perform well under weather conditions for which they were not trained. We expect that NeuralGCM may generalize better than machine-learning-only atmospheric models, because NeuralGCM employs neural networks that act locally in space, on individual vertical columns of the atmosphere. To test this, we compare versions of NeuralGCM-0.7 and GraphCast trained on data up to 2017 across five years of weather forecasts beyond the training period. NeuralGCM doesn’t show a trend of increasing error when forecasts are initialized further away from the training data. To extend this test beyond 5 years, we trained a NeuralGCM-2.8 model with only data before 2000 and tested it on over 21 unseen years.

We use ECMWF’s ensemble (ENS) model as a reference baseline as it achieves the best performance across the majority of lead times (ref. 12). We assess accuracy using (1) root-mean-squared error (RMSE), (2) root-mean-squared bias (RMSB), (3) continuous ranked probability score (CRPS) and (4) spread-skill ratio, with the results shown in Fig. 2. Scorecards and metrics for additional variables and levels are included in the Extended Data figures.
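To make the four metrics concrete, here is a minimal NumPy sketch of how they are commonly defined for ensemble forecasts. It is an illustration under stated assumptions, not the evaluation code used in the study: the function names and the array layout (`member, time, point`) are hypothetical, every grid point is weighted equally (real evaluations on a latitude-longitude grid usually apply area weights), and the spread-skill ratio omits any finite-ensemble correction factor.

```python
import numpy as np


def rmse(forecast, truth):
    """Root-mean-squared error over all times and grid points."""
    return float(np.sqrt(np.mean((forecast - truth) ** 2)))


def rmsb(forecast, truth):
    """Root-mean-squared bias: time-mean error per point, then RMS over points."""
    bias = np.mean(forecast - truth, axis=0)  # axis 0 is time
    return float(np.sqrt(np.mean(bias ** 2)))


def crps(ensemble, truth):
    """Ensemble CRPS estimate, E|X - y| - 0.5 * E|X - X'|, averaged over
    time and points. Assumes shape (member, time, point) with >= 2 members."""
    m = ensemble.shape[0]
    skill = np.mean(np.abs(ensemble - truth[None]), axis=0)
    pair_diff = np.abs(ensemble[:, None] - ensemble[None, :])  # (m, m, time, point)
    # Divide by m * (m - 1) so that the zero-valued i == j pairs are excluded.
    spread = pair_diff.sum(axis=(0, 1)) / (m * (m - 1))
    return float(np.mean(skill - 0.5 * spread))


def spread_skill_ratio(ensemble, truth):
    """Ratio of mean ensemble spread to the RMSE of the ensemble mean.
    Values near 1 suggest a well-calibrated ensemble."""
    spread = np.sqrt(np.mean(np.var(ensemble, axis=0, ddof=1)))
    skill = rmse(ensemble.mean(axis=0), truth)
    return float(spread / skill)


if __name__ == "__main__":
    # Synthetic data only: 10 members, 20 times, 50 points.
    rng = np.random.default_rng(0)
    truth = rng.normal(size=(20, 50))
    ensemble = truth[None] + rng.normal(size=(10, 20, 50))
    print("RMSE of ensemble mean:", rmse(ensemble.mean(axis=0), truth))
    print("RMSB of ensemble mean:", rmsb(ensemble.mean(axis=0), truth))
    print("CRPS:", crps(ensemble, truth))
    print("Spread-skill ratio:", spread_skill_ratio(ensemble, truth))
```

RMSE and RMSB are computed on a single deterministic field (here the ensemble mean), while CRPS and the spread-skill ratio need the full ensemble. A lower CRPS is better, and for an ensemble whose members are all identical it reduces to the mean absolute error.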

We examined how well NeuralGCM, GraphCast and ECMWF-HRES capture the geostrophic wind balance, the near-equilibrium between the dominant forces that drive large-scale dynamics in the mid-latitudes. According to a recent study, Pangu misrepresents the vertical structure of the winds, and the misrepresentation worsens at longer lead times. GraphCast likewise shows an error that gets worse with lead time. Verified against ERA5 data, NeuralGCM depicts the vertical structure of the winds more accurately than GraphCast does. However, ECMWF-HRES still shows a slightly closer alignment to ERA5 data than NeuralGCM does. Within NeuralGCM, the representation of the geostrophic wind’s vertical structure degrades only slightly in the initial few days and shows no noticeable changes thereafter, particularly beyond day 5.
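For readers unfamiliar with the term, the geostrophic wind is the wind that would exactly balance the pressure-gradient force against the Coriolis force. The sketch below computes it from a geopotential field on one pressure level using the textbook relations u_g = -(1/f) ∂Φ/∂y and v_g = (1/f) ∂Φ/∂x, with f = 2Ω sin(latitude). It is an illustrative calculation only, not the diagnostic code from the study: the function name `geostrophic_wind`, the `(lat, lon)` array layout and the constants are assumptions, and the formula breaks down near the equator, where f approaches zero.

```python
import numpy as np

OMEGA = 7.292e-5        # Earth's rotation rate in rad/s (assumed constant)
EARTH_RADIUS = 6.371e6  # mean Earth radius in m (assumed constant)


def geostrophic_wind(geopotential, lat_deg, lon_deg):
    """Return (u_g, v_g) in m/s from geopotential (m^2/s^2) on a (lat, lon) grid.

    lat_deg and lon_deg are 1-D coordinate arrays in degrees. Latitudes
    should stay away from the equator and the poles.
    """
    lat = np.deg2rad(lat_deg)
    lon = np.deg2rad(lon_deg)
    f = 2.0 * OMEGA * np.sin(lat)[:, None]  # Coriolis parameter per latitude row

    # Derivatives with respect to latitude and longitude, in radians.
    dphi_dlat = np.gradient(geopotential, lat, axis=0)
    dphi_dlon = np.gradient(geopotential, lon, axis=1)

    # Convert angular derivatives to distances on the sphere.
    u_g = -dphi_dlat / (f * EARTH_RADIUS)
    v_g = dphi_dlon / (f * EARTH_RADIUS * np.cos(lat)[:, None])
    return u_g, v_g


if __name__ == "__main__":
    # Synthetic field: geopotential decreasing toward the pole.
    lat_deg = np.linspace(30.0, 60.0, 13)
    lon_deg = np.linspace(0.0, 45.0, 19)
    phi = 5.0e4 - 1.0e4 * np.deg2rad(lat_deg)[:, None] * np.ones((1, lon_deg.size))
    u_g, v_g = geostrophic_wind(phi, lat_deg, lon_deg)
    print("u_g range (m/s):", u_g.min(), u_g.max())
    print("max |v_g| (m/s):", np.abs(v_g).max())
```

The ageostrophic wind is the difference between the actual wind and this geostrophic estimate. Repeating the calculation on several pressure levels gives the kind of vertical structure that the comparison in the text refers to.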

Traditional climate models need to be run on supercomputers, whereas this model is so fast that it can be run in minutes, according to study co-author Stephan Hoyer.

Scott Hosking, a researcher at the Alan Turing Institute in London, says that the issue with machine-learning approaches is that they only ever train on data they have already seen. Because the climate is continuously changing, he adds, machine-learning models have to adapt to the unknown. “By bringing physics into the model, we can ensure that our models are physically constrained and cannot do anything unrealistic.”

Hoyer and his colleagues are keen to further refine and adapt NeuralGCM. “We’ve been working on the atmospheric component of modelling the Earth’s system … It’s perhaps the part that most directly affects day-to-day weather,” Hoyer says. He says the team wants to improve the model by adding more aspects of Earth science into future versions.