What have we really learned about glasses and speakers in AI hardware? And what do we want from next-generation AI devices, and how will we use them?

The last 48 hours have been a wild rollercoaster ride for AI hardware. During its I/O keynote, which ran roughly two hours and was packed with references to artificial intelligence, Google said it was going to make its own set of glasses. That included flashy partnerships with Gentle Monster and Warby Parker, as well as the first hands-on opportunity with its prototype glasses for developers and most of the tech media. On the ground, it was among the buzziest things to come out of Google I/O: a glimpse of what Big Tech thinks is the winning AI hardware formula.

After the news broke, I suspected that this meant a mini portable speaker scenario. Then earlier today, notable supply chain analyst Ming-Chi Kuo claimed that the current prototype is “slightly larger than the AI Pin, with a form factor as compact and elegant as an iPod Shuffle.” Kuo also claimed that a potential use case involves wearing it around the neck, that it will have cameras and microphones, and that it will connect with smartphones and computers.

Multimodal artificial intelligence calls for a few ingredients: a device that can see what you see, has access to a large language model, goes wherever you go, and lasts long enough to be useful in a variety of scenarios. Big Tech has mostly focused on wristwatches and, especially, glasses and pins. The average person will want a display, but most players are not sure about that. So far, Ive and Altman don’t seem to think so.

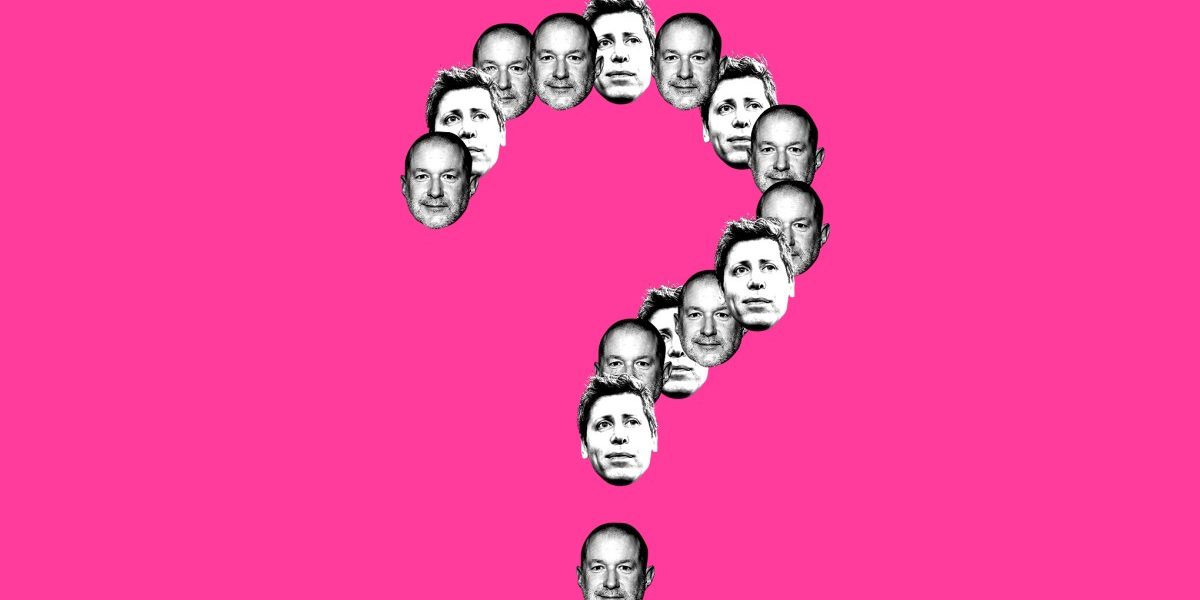

Right now, we’ve entered what I’d call the spaghetti phase of AI hardware. Bigger tech companies are throwing things at the wall to see what sticks. Silicon Valley wants generative AI on your devices. It’s just that no one agrees on the best approach, or on what people would actually pay for and use. We are all trying to guess who will crack the code, except that instead of murder suspects, rooms, and weapons, the guesses involve companies, form factors, and use cases. Is it Samsung with Project Moohan in your living room as you ask Gemini to take you to Tokyo? Meta with its Ray-Ban glasses on a Thailand beach as the Live AI feature translates a drinks menu? Plaud or Bee summing up the action items from your meeting? Or maybe it’s Ive and Altman, with whatever this prototype will do in whatever scenarios we’re meant to use it.

Here’s what we know so far. In a leaked call reviewed by The Wall Street Journal, Altman told OpenAI staffers it’s not a phone, or glasses — the form factor that Meta and Google are betting big on. Ive wasn’t keen on a device that had to be worn. It would be part of a “family of devices,” screenless, and a “third core” gadget outside of your phone and laptop. It’s something that can be stuck into your pocket but also displayed on your desk. Altman has described the prototype as one of the coolest pieces of technology ever, while Ive also threw shade at the Humane AI Pin and the Rabbit R1, the two biggest AI hardware flops of 2024.

is a senior reporter focusing on wearables, health tech, and more with 13 years of experience. Before coming to The Verge, she worked for Gizmodo and PC Magazine.

There were several interesting announcements at Build this week, including Elon Musk’s Grok model coming to Azure and Microsoft’s bet on how to evolve the plumbing of the web for AI agents. But the event was overshadowed by protesters who kept disrupting its keynotes over the business Microsoft does with Israel. Microsoft also tried to stop employees from sending emails containing the words “Palestine,” “Gaza,” and “Genocide.”

Anthropic held an event on Thursday in San Francisco to debut its Claude 4 models, which it claims are the world’s best for coding. With OpenAI, Google, and Meta all battling to win the interface layer of AI, Anthropic is positioning itself as the model arms dealer of choice. Windsurf, which is in talks to sell to OpenAI, appears to have been left out of getting access to the new models. “If models are countries, this is the equivalent of a trade ban,” as one post on X put it. What does it say about the state of the industry when a lab that claims to be safety-first is releasing models that would want to blackmail people?

What OpenAI, Google, and LoveFrom Can Learn About a Post-Phone World: A View from Sam Altman

ChatGPT is undoubtedly eating into search (it’s impossible to get Google execs to comment on the actual health of query volume), but Google has shown a willingness to modernize search faster than I expected. The situation is different from Apple, which is not competitive in the model race and is suffering from the kind of political infighting that drove up prices of various products.

There is no doubt that the frontier of model development will continue to be led by Google. The latest Gemini models are very good, and Google is clearly positioning its AI for a post-phone world with Project Astra. The Veo video model is impressive and the company has the compute to roll it out.

Ive ended his consulting relationship with Apple in 2022, a year before he met Altman. Ive was able to make a lot of money, but he wasn’t able to work on products that could compete with Apple. I expect Ive and Altman to announce a voice-first device later next year.

Evans Hankey and Tang Tan are among the 55 people who will be working for OpenAI, which is paying $6.5 billion in equity. They will report to Peter Welinder, a veteran OpenAI product leader. The rest of LoveFrom’s designers, including legends like Mike Matas, are staying put with Ive, who is currently designing the first-ever electric Ferrari and advising the man who introduced him to Altman, Airbnb CEO Brian Chesky. Ive and LoveFrom will assume deep design and creative responsibilities within OpenAI.

The leaders of OpenAI and Google have been living rent-free in each other’s heads since ChatGPT took the world by storm. Around last year’s I/O, OpenAI showcased its advanced voice mode, and this year many were wondering if Sam Altman would try something similar.

As always, I welcome your feedback, especially if you have thoughts on this issue, an opinion about stackable simulations, or a story idea to share. You can either ping me securely or respond here.