World first: brain implant lets man speak with expression and sing

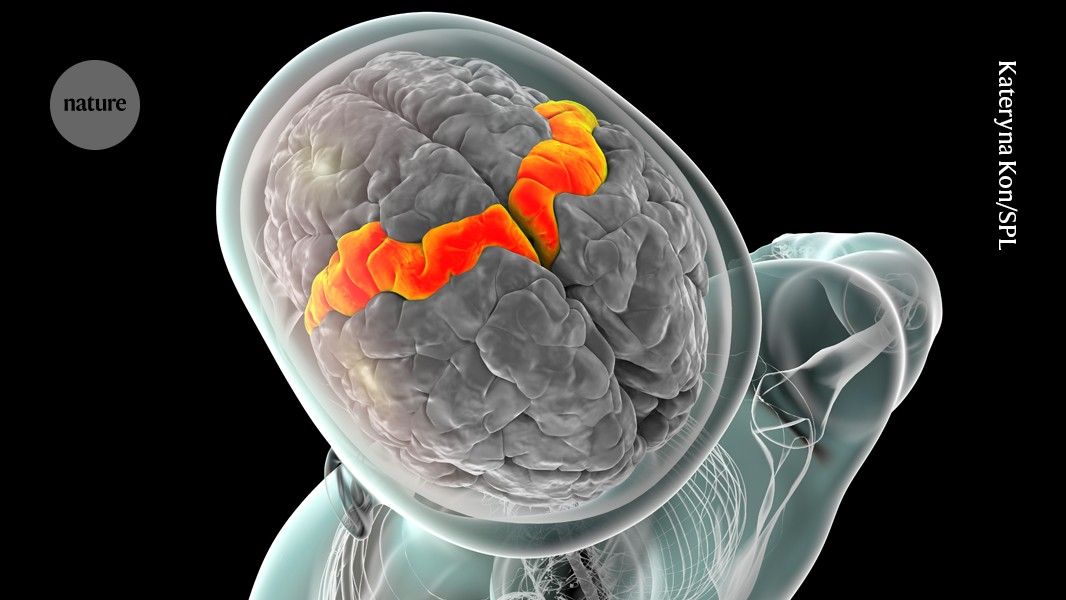

The participant underwent surgery to receive the brain implant five years after his symptoms began. Study co-author Maitreyee Wairagkar, a neuroscientist at the University of California, Davis, and her colleagues trained deep-learning algorithms to capture the signals in his brain every 10 milliseconds. The system decodes, in real time, the sounds the man attempts to produce rather than his intended words.

“This is the holy grail in speech BCIs,” says Christian Herff, a computational neuroscientist at Maastricht University, the Netherlands, who was not involved in the study. “This is now real, spontaneous, continuous speech.”

The study participant, a 45-year-old man, lost his ability to speak clearly after developing amyotrophic lateral sclerosis, a form of motor neuron disease that damages the nerves controlling muscle movements, including those needed for speech. Although he could still make sounds and mouth words, his speech was slow and unclear.

Source: World first: brain implant lets man speak with expression ― and sing

Speech Synthesis from Biosignal and Neural Signals in Trials of Disease: An Empirical Analysis of Neurodemonstration and a Case Study of First Speech

People do not always use words to communicate what they want. There are interjections, and there are other expressive vocalizations that are not in any vocabulary. The approach the team has taken is completely unrestricted, so it can reproduce these sounds as well.

The team also personalized the synthetic voice to sound like the man’s own, by training AI algorithms on recordings of interviews he had done before the onset of his disease.

a. Audio recording, audio spectrogram, and spectrograms of the two neural electrodes most correlated with the audio, for three example trials. The examples show three types of speech task. The prominent spectral structures in the audio spectrogram cannot be observed even in the two most correlated neural electrodes. An increase in neural activity can be observed before speech onset for each word, reflecting speech preparatory activity and further arguing against acoustic contamination. The last word in the word emphasis example, “going”, is not fully vocalized, yet it shows an increase in neural activity similar to that for the other words. Contamination matrices and statistical criteria are shown in the bottom row, where the P-value indicates whether the trial is significantly acoustically contaminated.

b. An example trial of attempted speech with simultaneous recording of intracortical neural signals and various biosignals measured using a microphone, a stethoscopic microphone and IMU sensors (accelerometer and gyroscope). Speech was synthesized from each biosignal separately or from all three combined.

c. Intelligible speech could not be synthesized from biosignals measuring sound, movement, and vibrations during attempted speech. (Left) Cross-validated Pearson correlation coefficients between the target speech and speech synthesized from neural signals, from each biosignal, and from all biosignals combined. Reconstruction accuracy is significantly lower for decoding speech from biosignals than from neural activity (two-sided Wilcoxon rank-sum, P = 10⁻⁵⁹, n = 240 sentences). (Right) The distributions of Pearson correlation coefficients for speech decoded from biosignals and from neural signals are mostly non-overlapping, indicating that synthesis quality from biosignals is much lower than from neural signals. The intelligibility of voice synthesized from neural activity and from biosignals was also tested. Neural decoding had a median error rate of 42%, compared with a 100% error rate for stethoscope recordings. This shows that intelligible speech cannot be decoded from non-neural biosignals.
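The comparison described in panel c can be illustrated with a minimal sketch: compute one Pearson correlation per sentence between a target trace and a decoded trace, then compare the two sets of scores with a two-sided Wilcoxon rank-sum test. This is not the study's code or data. The traces are synthetic, the noise levels and the helper names `pearson`, `rank_sum_test` and `synth_scores` are hypothetical, and the P-value uses the normal approximation without a tie correction.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    Ties receive average ranks; no tie correction is applied to the variance.
    Returns (z, p).
    """
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-indexed
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p


rng = random.Random(0)  # fixed seed so the synthetic example is repeatable


def synth_scores(noise_sd, n_sentences=240, n_samples=50):
    """Per-sentence correlation between a synthetic target and a noisy copy.

    noise_sd is a made-up stand-in for decoder quality, not a measured value.
    """
    scores = []
    for _ in range(n_sentences):
        target = [rng.gauss(0, 1) for _ in range(n_samples)]
        decoded = [t + rng.gauss(0, noise_sd) for t in target]
        scores.append(pearson(target, decoded))
    return scores


# Hypothetical noise levels: low noise mimics decoding from neural activity,
# high noise mimics decoding from biosignals.
neural_r = synth_scores(noise_sd=0.5)
biosignal_r = synth_scores(noise_sd=5.0)

z, p = rank_sum_test(neural_r, biosignal_r)
print(f"median r (neural-like):    {sorted(neural_r)[len(neural_r) // 2]:.2f}")
print(f"median r (biosignal-like): {sorted(biosignal_r)[len(biosignal_r) // 2]:.2f}")
print(f"rank-sum z = {z:.1f}, two-sided p = {p:.1e}")
```

When the two score distributions barely overlap, as the caption reports, the rank-sum statistic sits close to its maximum for the sample size, which is why such a small P-value can arise from only 240 sentences.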